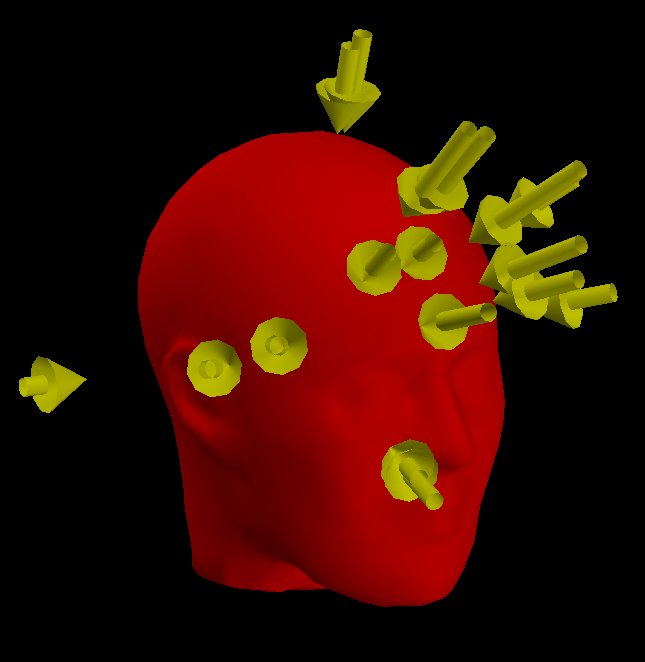

Database Synchronization for the Simbex Head Impact Telemetry SystemBreck CarterIntroductionSimbex faced several database synchronization challenges in a research and development environment where the software continued to change and evolve. This article discusses two of those challenges: central control over schema changes that must be pushed out to remote databases, and central diagnosis of referential integrity violations that may occur on those remote databases. Several features of the SQL Anywhere database and MobiLink synchronization software were used to solve these challenges, including "sp_hook" stored procedures for customizing the synchronization process, the xp_read_file function for loading files into blob columns, the EXCEPTION clause to perform try-catch error handling of SQL statements, the STRING function for the dynamic construction of SQL statements to be run via EXECUTE IMMEDIATE, and the LIST aggregate function for constructing strings out of result sets. Overview of the Simbex HIT SystemOn April 28, 2002, at a NASCAR race in Fontana California, Dale Earnhardt Jr. hit a concrete wall at 130 miles per hour. If he had been wearing a Simbex-equipped helmet, Earnhardt might not have been able to hide the severity of the head injury he received that day. Instead, Earnhardt suffered in secrecy, and he did not reveal the full extent of his injury until several months later. Simbex is a New Hampshire-based research and development company specializing in biomechanical feedback systems. Their premier product is the Head Impact Telemetry System, a real-time hardware and software system designed to measure and record blows to the head. Initially developed for use in college and high school football, the HIT System allows sideline staff to monitor the impact history for all players simultaneously, as well as providing pager alerts whenever a player receives a serious impact or series of impacts. Impacts are measured by accelerometers in each helmet, visible as the small round knobs in Figure 1. This data is sent wirelessly to the HIT System sideline controller shown in Figure 2. A laptop computer is used to store, analyze and display the data locally; Figure 3 shows one of the displays where the impact history for one player is represented graphically. Figure 1: HIT System hardware mounted in a football helmet Figure 2: HIT System sideline controller at a Virginia Tech football game Figure 3: HIT Impact Analyzer screen display The SQL Anywhere relational database system from the iAnywhere Solutions subsidiary of Sybase is used to store the impact data in the HIT System sideline computer. As well as being analyzed and displayed locally, that data is also uploaded to a central SQL Anywhere database at Simbex headquarters for further analysis and study. The upload is handled by the MobiLink synchronization software that ships with SQL Anywhere... and I am proud to say I played a small role in the development of the HIT System by providing the MobiLink synchronization setup. Synchronizing Schema ChangesBeing a true research and development company, Simbex violates one of the simplest rules-of-thumb in database synchronization: If you don't want trouble, don't change the schema. A schema change is hard enough with one database, but with two or 10 or 100 databases in active use, it's an administrative nightmare. In a research environment, it's also a common occurrence: nothing stays the same for long. To help reduce the administrative pain, central control of schema changes was built in to the HIT System synchronization setup using a new table called dbmlsync_sql_download shown in Figure 4 (with "dbmlsync" referring to the MobiLink client component dbmlsync.exe). Figure 4: The SQL download tableCREATE TABLE dbmlsync_sql_download ( sql_download_id UNSIGNED BIGINT NOT NULL DEFAULT autoincrement, db_id INTEGER NOT NULL, sql LONG VARCHAR NOT NULL, modification_date TIMESTAMP NOT NULL DEFAULT timestamp, PRIMARY KEY ( sql_download_id ) ); Rows in dbmlsync_sql_download are inserted into the central or "consolidated" database at Simbex and downloaded to the remote sideline controller databases. The columns are filled as follows: sql_download_id is automatically incremented to uniquely identify each row, and it also specifies the processing order if two SQL scripts in this table apply to the same remote database. A two-step process is used to create rows in dbmlsync_sql_download. First, a text file is coded containing the SQL script, and second, that text file is loaded into a new row in dbmlsync_sql_download. Figure 5 shows an example of a SQL script used to add a new column to a table on the remote database; in this example, the file name is alter_remote_version_v2m.sql.

Figure 5: A sample SQL script to be downloaded

The first ALTER TABLE command in Figure 5 adds the new "type" column to the end of each row the sbx_activity table, and initializes that column to 0 for all existing rows. The next two commands are MobiLink overhead; they are necessary to keep the MobiLink synchronization setup up to date. The ALTER PUBLICATION command tells MobiLink to include the new type column in the next synchronization. The ALTER SYNCHRONIZATION SUBSCRIPTION command tells MobiLink that a different set of scripts on the consolidated database, identified as version "PC_v2m", must now be used when synchronizing with this remote database. The special "--)RUN" comment lines in Figure 5 serve to identify commands that are to be executed separately on the remote database; this process will be handled by code shown later in this article. Testing schema changes ahead of time is extremely important in a synchronizing environment; even the smallest mistake can bring the entire setup to a halt when the synchronization software detects a mismatch or when the database software detects an integrity violation. Gathering separate commands into a single SQL script makes coding and testing a schema change easier, as opposed to storing each command as a separate entry. Once the SQL script file was created, it was loaded into the consolidated database using the INSERT shown in Figure 6. In this example the db_id of 2 identifies which sideline controller database the SQL is going to, and the SQL Anywhere function xp_read_file is called to convert the script file alter_remote_version_v2m.sql into a LONG VARCHAR string. Figure 6: Loading the SQL script into the download tableFigure 7 shows the download_cursor script that the MobiLink server runs on the consolidated database to determine which rows in dbmlsync_sql_download are to be downloaded to which remote database.INSERT dbmlsync_sql_download ( db_id, sql ) VALUES ( 2, xp_read_file ( 'alter_remote_version_v2m.sql' ) )

Figure 7: Download_cursor script for the SQL download table

The WHERE clause contains two "?" placeholders which are filled in by MobiLink at run time. The first "?" is replaced by the date and time of the previous successful download, and that is used to choose rows for this download that have been inserted or updated since that point. The second "?" is the "MobiLink user name" which serves to uniquely identify the remote database that is being synchronized. In the case of Simbex, the db_id values 1, 2, 3 are also used as MobiLink user names for simplicity's sake. Figure 8 shows the procedure that executes the SQL scripts when they reach the remote database. It is a user-written procedure, but because it has been given the special name "sp_hook_dbmlsync_schema_upgrade" the MobiLink client component dbmlsync.exe will automatically call this procedure as soon as all upload and download processing is complete. MobiLink recognizes several different "sp_hook" procedure names like sp_hook_dbmlsync_schema_upgrade, and if you code them they will be called. This one is designed for processing schema changes like our example, and it offers several advantages over a solution you might create to run outside the MobiLink synchronization process: The sp_hook_dbmlsync_schema_upgrade procedure is called automatically; you don't have to build any special logic on the remote database side to determine when a schema change needs to be applied. The procedure in Figure 8 is driven by a cursor fetch loop using the streamlined SQL Anywhere FOR statement. This cursor retrieves all rows in the dbmlsync_sql_download table that have not yet been processed, and that fact is determined by a NOT EXISTS subquery on the dbmlsync_sql_download_ack table (which will be described later).

Figure 8: "Hook" procedure to run the SQL script

Inside the cursor fetch FOR loop in Figure 8, a nested WHILE loop breaks the SQL script into the individual parts separated by the special RUN comment marker, and inside that WHILE loop each SQL part is processed step-by-step: Step 1 extracts the SQL part from the larger string. Figure 9 shows the dbmlsync_sql_download_ack table that is used in Step 3 described above. Figure 9: The SQL download acknowledgement tableCREATE TABLE dbmlsync_sql_download_ack ( sql_download_id UNSIGNED BIGINT NOT NULL, sql_part_number UNSIGNED INT NOT NULL, db_id INTEGER NOT NULL, sql_part LONG VARCHAR NOT NULL, sql_part_sqlstate VARCHAR ( 5 ) NOT NULL, sql_part_errormsg VARCHAR ( 32767 ) NOT NULL DEFAULT '', creation_date TIMESTAMP NULL DEFAULT current timestamp, PRIMARY KEY ( sql_download_id, sql_part_number ) ); The dbmlsync_sql_download_ack table contains the sql_download_id and db_id values from the corresponding rows in dbmlsync_sql_download, plus these extra columns:

Figure 10 shows the upload_insert script that the MobiLink server runs on the consolidated database whenever a new row in dbmlsync_sql_download_ack is uploaded. This upload is a very important part of the schema change process because it allows changes to be tracked and checked centrally, with no DBA present at the remote location.

Figure 10: Upload_insert script for the acknowledgment table

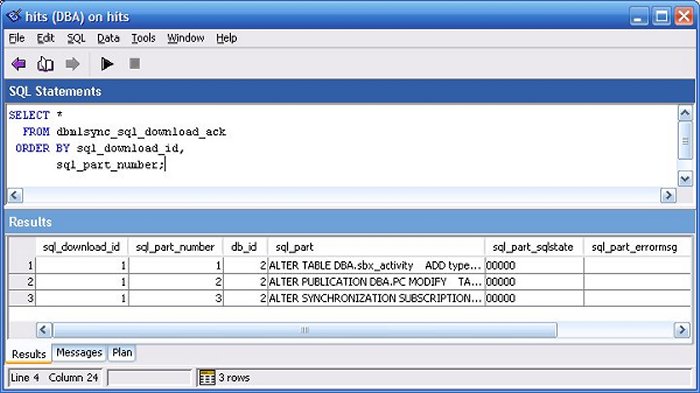

Figure 11 shows what the dbmlsync_sql_download_ack table looks like on the consolidated database after the SQL script shown in Figure 5 has been processed on a remote database and the acknowledgement rows uploaded in return. In this case everything worked, no error messages. Figure 11: Confirming the changes with dbmlsync_sql_download_ack Dealing With Referential Integrity ViolationsOne of the consequences of making schema and other changes in an evolving research and development environment is the inevitable introduction of program and design errors, including referential integrity violations that occur only on the remote database. This can happen because the remote database holds a subset of the rows that exist on the consolidated; a critical row may be present on the consolidated but missing on the remote, resulting in a referential integrity or foreign key violation. The MobiLink synchronization software is designed to be robust and fault tolerant in practice, and to be easy to administer without DBAs at remote sites. One of results of this design goal is that MobiLink, by default, silently handles referential integrity violations that occur on the remote database while data is being downloaded. It does this by deleting any rows that get in the way of referential integrity. Figure 12 shows an example of this behavior. The "verbose diagnostic logging" option has been turned on to reveal that two referential integrity violations were detected on the remote database during the download, and that the offending rows in sbx_data_access and sbx_beeper were deleted.

Figure 12: MobiLink resolves RI violations on the remote database

Silently handling RI violations isn't always desirable. In fact, even logging the problem at the remote site might not be good enough when administration is being carried out centrally, at the consolidated database. At Simbex, this challenge was met by implementing the RI violation tracking table called dbmlsync_ri_violation shown in Figure 13. MobiLink was allowed to continue resolving the violations, but the synchronization process was customized to record each violation in this tracking table and to upload that information to the consolidated database.

Figure 13: The RI violation tracking table

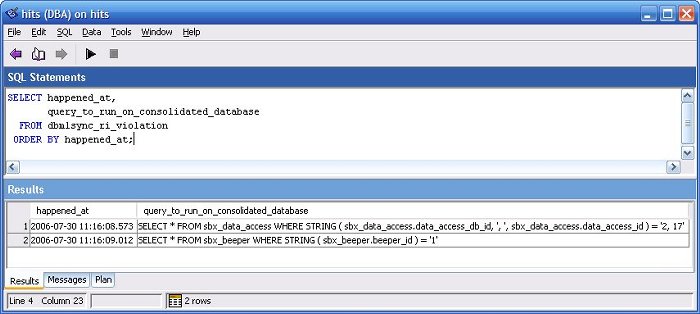

Rows in this tracking table answer two important questions: Which RI constraints were violated, and which rows were the troublemakers? The first question is answered by the foreign_key_table_name, primary_key_table_name and role_name columns. The second (and often most important) question is answered by the query_to_run_on_consolidated_database column which contains actual SELECT statements that can be run on the consolidated database to display the rows that caused problems on the remote database. For the violations shown earlier in Figure 12, the values in query_to_run_on_consolidated_database look like this: SELECT * FROM sbx_data_access WHERE STRING ( sbx_data_access.data_access_db_id, ', ', sbx_data_access.data_access_id ) = '2, 17' SELECT * FROM sbx_beeper WHERE STRING ( sbx_beeper.beeper_id ) = '1' Here are the other columns in the tracking table:

Rows are inserted in the tracking table by another "hook" procedure, called sp_hook_dbmlsync_download_log_ri_violation, shown in Figure 14. When a procedure with this name exists in the remote database, the MobiLink client component dbmlsync.exe calls it whenever an RI violation is detected.

Figure 14: "Hook" procedure to record RI violations

The procedure in Figure 14 is constructed to be schema-independent; in other words, it does not depend on any special knowledge of the Simbex database design, so it should not be affected by changes to that design. The processing is done step-by-step: Step 1 handles that fact that MobiLink passes parameters to the hook procedures indirectly, via a special temporary table called #hook_dict. Rows in this table contain parameter name and value pairs, with the parameter names depending on which hook procedure is being executed. In this case, the parameters of interest are the parent and child table names and the foreign key role or constraint name involved in the RI violation.Figure 15 shows the final result of all the work performed by the sp_hook_dbmlsync_download_log_ri_violation procedure. The SELECT statements uploaded to the consolidated database that can be used to display the child table rows that caused the referential integrity violations on the remote database. Figure 15: Using dbmlsync_ri_violation to investigate a problem ConclusionMy experience on the Simbex HIT System project has reinforced my conviction that central administration of the database synchronization process is critically important, simply because there will never be enough database administrators for all the remote sites where databases are deployed. This will remain true even though the HIT System is emerging from its R&D origins as a commercial product, and is being marketed by sports equipment giant Riddell as the "Riddell Sideline Response System". Synchronization at the database row level, rather than application message level, is very helpful in reducing application complexity and the errors that result. Nevertheless, stuff happens, even in production, and features which support centralized control over diagnosis and repair are invaluable. Breck Carter is principal consultant at RisingRoad Professional Services, providing consulting and support for SQL Anywhere databases and MobiLink synchronization with Oracle, DB2, SQL Server and SQL Anywhere. He is also author of SQL Anywhere Studio 9 Developer's Guide, now available in English, Japanese and Chinese; see here for more information. Breck can be reached at breck.carter@risingroad.com. |

||

|

|

||

|

This page was last updated on January 25, 2008. |

||